Down the Biocomputing Stack ~ Motivation

repurposing great ideas from computing to build for biology

This article is part of a series. The following posts are still in progress and will be released over the coming weeks: The Organic Filesystem, Serverless Biology, and Analysis as Code.

Biology is complicated. No one really understands it. But the desire to do so should be clear.

For the next generation of doctors and healers, it holds the key to ending disease and suffering.

For the starry-eyed dreamers, it’s a substrate to build new worlds, bring back the extinct, and gain power over nature.

For those afraid of death, it has tapped into our mythical obsession with immortality, catalyzing a community of builders and scientists who don’t want to die.

And for those pure souls driven by curiosity and scholarship, the chaotic dance of metabolism, expression, and immunity within even a single cell unearths enough puzzles to keep one occupied for many lifetimes.

Our desire for a true understanding of biology is unifying. Few other pursuits carry such promise of changing the world.

An assay is human software

To move towards these dreams, the bricklayers of scientific understanding rely on empiricism. They ask questions and they build.

A scientist might become captivated by some phenomenon and come up with a creative explanation. She would then construct an experiment, carry it out with patience and attention to detail, and arrive at a result that leads her to reject or otherwise “not reject” this explanation.

A bioengineer will build upon this understanding to produce an effective cell therapy or a better viral vector. He would create a “library” of biological designs, construct an experiment, carry it out with patience and attention to detail, and arrive at a result that leads him to reject or otherwise “not reject” the fitness of his creations.

In both cases, we are dependent on well-crafted experimental design and empiricism to understand biology. Without this, we understand absolutely nothing. The assay is a probe lowered into an impossibly complex black box. It spits out glimmers of insight and understanding.

How did we come up with these ‘assays’? And how specifically do we use them to achieve this understanding? It's important to understand the limitations here.

We return to our scientist and remember that she is curious about something she sees and wants to figure it out. If the problem is hard, she will have to create a new way to measure her medium to make progress. She wonders how chemically modified nucleotides alter expression compared with unmodified ones, but she only has access to Sanger sequencing, which won’t cut it: she will have to figure out how to “read” the chemical modifications on her bases. With creativity and a burst of intuition, a new experimental design is born. Our scientist constructs new tooling to extract a specific type of data from a living system and answer her question.

What if others have a similar question? If the question being answered is sufficiently general and the procedure is easy enough to explain (and cheap!), then the experiment spreads under a new shared language and protocol. The structure of the experiment is reusable, and such ‘assays’ have been immortalized in fancy websites, canonized in textbooks, even sold in ready-to-go kits like a box of tinker toys.

The assay is now a standard-issue screwdriver in a bench scientist’s toolbox. Reach for a 10x Genomics kit to understand transcriptional state at single-cell granularity. Want spatial resolution of the same transcripts? Go FISH.

In a hand-wavy sense, the assay is also a chunk of reusable logic with a standardized set of inputs and outputs. A programmer might call this a “strongly typed function”. One wouldn’t do a miniprep on HEK-293 cells and expect membrane protein in the elution column; the nature of what goes in and what comes out is well defined. The assay is a portable piece of logic that lives somewhere between the pages of a lab notebook, a grad student’s brain, and a furious pipette until the results have been returned.
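To make the “strongly typed function” analogy concrete, here is a minimal Python sketch that models a miniprep as a typed function. The types `BacterialCulture`, `MammalianCells`, and `PlasmidDNA` and the function `miniprep` are hypothetical illustrations, not part of any lab-automation library. A static checker such as mypy would flag the HEK-293 call before anything runs; the `isinstance` guard enforces the same contract at runtime.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BacterialCulture:
    """Hypothetical input type: a bacterial culture carrying a plasmid."""
    strain: str
    plasmid: str
    volume_ml: float


@dataclass(frozen=True)
class MammalianCells:
    """Hypothetical type for a mammalian cell line such as HEK-293."""
    line: str
    count: int


@dataclass(frozen=True)
class PlasmidDNA:
    """Hypothetical output type: purified plasmid DNA in the elution."""
    plasmid: str
    volume_ul: float


def miniprep(culture: BacterialCulture, elution_volume_ul: float = 50.0) -> PlasmidDNA:
    """A miniprep modeled as a typed function: culture in, plasmid DNA out."""
    # Runtime guard mirroring what a static type checker would catch.
    if not isinstance(culture, BacterialCulture):
        raise TypeError(f"miniprep expects a BacterialCulture, got {type(culture).__name__}")
    return PlasmidDNA(plasmid=culture.plasmid, volume_ul=elution_volume_ul)
```

What the sketch cannot capture is the weakness of the analogy: this function returns the same output for the same input every time, while the bench does not.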

However, care must be taken: the analogy is weak. Crucial to our understanding of the assay is the inconsistency of wet lab biology. Unlike a computer, the human processor (the pipette-wielding grad student) is anything but consistent, and the inputs are far from identical. A minute change in buffer concentration from careless measuring, or evaporation during a sandwich break, could change the entire readout. This process is tedious, painstaking, long, and lossy. Sometimes it's hard to know whether the results can be trusted at all.

Silicon and biology

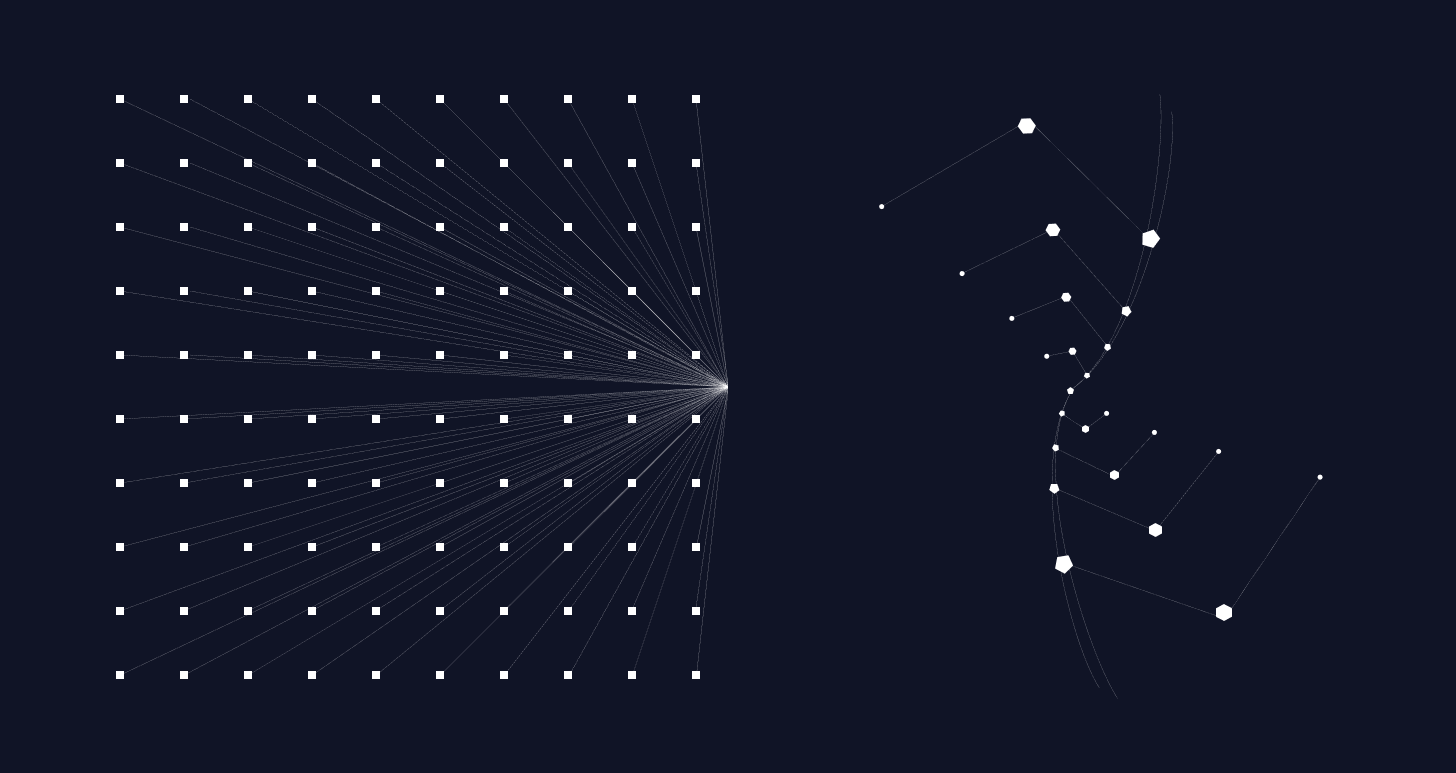

With a messy biological system and unreliable experiments, we need a method to separate signal from noise. Computers make the noisy and complex data from the lab human-interpretable. Sequencing data floods our servers by the terabytes as fragments of unintelligible letters. Genetic screens spit out convoluted files. Single-cell transcriptomics produces constellations of hieroglyphic vectors. Software takes these raw outputs and massages them into a format that a biologist can use to answer questions, e.g., a browsable genome or a 3D tissue atlas.

Of course, until a real living system is poked, nothing can be said. The ‘assay’ is still the sole means of elucidating truth in biology. And until someone figures out how to model the dynamics of 60 million interacting molecules in a single cell using the magic of “machine learning”, we’re stuck poking real things. (Ask the Stanford AI department how they plan to use their glorified regressors to solve a graph partition problem with 60 million nodes. Will attention and adding more layers solve that one too?) For the time being, the only way for science to continue its awkward random walk towards understanding is to do good science. Not computer science. Yet. Go figure.

However, while not a wholesale replacement for the wet lab, the importance of software as a complement to good experimentation cannot be overstated. It is now a crucial and expected component of biological R&D. The proliferation of dedicated bioinformatics cores at universities and companies is a testament to this point.

New Tooling for Biocomputing

This intersection of computer science and biology has bred a number of fuzzy sub-disciplines (e.g., computational biology, bioinformatics, biostatistics) and has become the founding thesis for hundreds of new biotechs. Yet the tooling has not kept up.

Our ad engineers have built revolutionary frameworks in their noble crusade to make us click on weird things we don’t want online. Yet bioengineers are met with atrocious code quality, dependency nightmares, and a general lack of specialized tooling. I’m sure the irony of the situation is not lost on anyone. The reproducibility crisis is not limited to the bench, even as the mighty feast from a software cornucopia while building the metaverse.

A dramatic overhaul of computational tooling for biology is needed, systemic and from the bottom up; that much is abundantly clear. More than just tooling, a new style of software infrastructure should be constructed, where every feature is tailor-made to support the analysis of living things.

To this end, we propose a new biocomputing platform. To understand how it works, let’s walk through each of its components. Together we will deconstruct its architecture and understand how to repurpose great ideas from computing to assemble each module from scratch.

The Organic Filesystem

Starting with the filesystem, we’ll learn how to manage the complexity and space requirements of biological data with an intelligent proxy over cloud storage. Biodata has an enormous footprint and a highly heterogeneous structure. Its sheer size and velocity of growth have forced us to consider new types of storage infrastructure, and its complex structure requires bespoke pre-processing that is difficult to manage.

In 1972, Gordon Bell recognized that the success of the multiprocessor would be enabled by miniaturizing its constituent microprocessors. The power of the device in aggregate grows as its components become cheaper and simpler.

In 1988, David Patterson roughly extended this idea to storage systems. Recognizing that more robust and performant storage systems could be assembled from an array of very cheap components, he and his colleagues proposed RAID, which became the impetus for file virtualization: an approach in which filesystems are constructed over highly distributed disk arrays for performance, cost, and reliability. The underlying physical location of a file becomes unimportant, files can be intelligently pre-processed automatically, and rich metadata about their content can be surfaced. The file is now a software contract.
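A minimal sketch of file virtualization in Python, assuming in-memory dictionaries as stand-ins for physical disks or cloud buckets. `VirtualFS`, `Backend`, `VirtualFile`, and the FASTA record-count hook are hypothetical names invented for illustration. Callers address files only by logical path; the system decides placement (here an assumed CRC32 hash over backends), stores blobs under content-addressed SHA-256 keys, and computes metadata with a pre-processing hook at write time.

```python
import hashlib
import zlib
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Backend:
    """Hypothetical stand-in for one physical store (a disk or a bucket)."""
    name: str
    blobs: Dict[str, bytes] = field(default_factory=dict)


@dataclass
class VirtualFile:
    """Catalog entry mapping a logical path to its physical placement."""
    path: str
    backend: str
    key: str
    metadata: Dict[str, object]


def fasta_records(data: bytes) -> Dict[str, object]:
    """Example pre-processing hook: count header lines in a FASTA file."""
    return {"records": sum(1 for line in data.splitlines() if line.startswith(b">"))}


class VirtualFS:
    """Callers see logical paths and metadata; physical placement stays hidden."""

    def __init__(self, backends: List[Backend]) -> None:
        self.backends = {b.name: b for b in backends}
        self.catalog: Dict[str, VirtualFile] = {}
        # Hooks keyed by file suffix run once, when a file is written.
        self.hooks: Dict[str, Callable[[bytes], Dict[str, object]]] = {".fasta": fasta_records}

    def _place(self, path: str) -> Backend:
        # Assumed placement policy: a deterministic hash of the logical path.
        names = sorted(self.backends)
        return self.backends[names[zlib.crc32(path.encode()) % len(names)]]

    def write(self, path: str, data: bytes) -> VirtualFile:
        backend = self._place(path)
        key = hashlib.sha256(data).hexdigest()  # content-addressed physical key
        backend.blobs[key] = data
        metadata: Dict[str, object] = {"size": len(data)}
        for suffix, hook in self.hooks.items():
            if path.endswith(suffix):
                metadata.update(hook(data))
        entry = VirtualFile(path, backend.name, key, metadata)
        self.catalog[path] = entry
        return entry

    def read(self, path: str) -> bytes:
        entry = self.catalog[path]
        return self.backends[entry.backend].blobs[entry.key]

    def stat(self, path: str) -> Dict[str, object]:
        return dict(self.catalog[path].metadata)
```

Swapping the dictionaries for real object stores would not change the caller's view, which is the point of treating the file as a contract rather than a location.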

To build a biologically literate storage system, we will leverage file virtualization to construct an organic filesystem: one that grows to absorb the raw scale of biodata as it continues to explode, and that intelligently parses multi-omics data transiently.

Continue to the organic filesystem. (Coming soon…)

Serverless Biology

We will then learn how to manage the resources and costs associated with processing such vast volumes of data: how to orchestrate diverse computing environments, parallelize across samples, and slash costs while also increasing reliability.

The rise of cloud computing in 2008 changed the relationship between the organization and its computing infrastructure. No longer would a team have to install server racks and hire IT expertise to build software applications. Instead, servers could be outsourced to remote data centers and scaled within hours to meet shifting demand.

We are in the early innings of a more disruptive shift — that of serverless computing — where computation is completely detached from the physical silicon that will execute it. Code can be written with the illusion of infinite resource supply and the orchestration of computers to run it will happen automatically. The user will only pay for resources needed to run that code, nothing more.

Armed with serverless computing, we can dynamically provision seas of computers (thousands of cores and terabytes of memory) to process our biodata, and pay only for what we use.
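The pay-for-what-you-use argument can be made concrete with back-of-the-envelope arithmetic. The sketch below compares pay-per-use billing with an always-on cluster and estimates wall-clock time when equal-length tasks run in waves. The price per core-second and the workload (1,000 samples, 10 minutes each on 4 cores) are made-up illustrative numbers, not any provider's pricing, and real serverless platforms add per-invocation overheads and concurrency limits that are not modeled here.

```python
import math
from typing import Sequence


def pay_per_use_cost(task_seconds: Sequence[float], cores_per_task: int,
                     price_per_core_second: float) -> float:
    """Serverless-style billing: pay only for the core-seconds tasks actually use."""
    return sum(task_seconds) * cores_per_task * price_per_core_second


def provisioned_cost(nodes: int, cores_per_node: int, hours: float,
                     price_per_core_second: float) -> float:
    """Always-on cluster billing: every core is paid for over the whole window."""
    return nodes * cores_per_node * hours * 3600 * price_per_core_second


def wall_clock_seconds(n_tasks: int, task_seconds: float, cores_per_task: int,
                       total_cores: int) -> float:
    """Equal-length tasks run in waves, limited by how many fit on the cores."""
    concurrent = max(1, total_cores // cores_per_task)
    return math.ceil(n_tasks / concurrent) * task_seconds


if __name__ == "__main__":
    PRICE = 1e-5              # hypothetical dollars per core-second
    samples = [600.0] * 1000  # hypothetical: 1,000 samples, 10 minutes each
    print(pay_per_use_cost(samples, 4, PRICE))
    print(provisioned_cost(10, 64, 24, PRICE))
    print(wall_clock_seconds(1000, 600.0, 4, 10 * 64))   # 4200.0
    print(wall_clock_seconds(1000, 600.0, 4, 1000 * 4))  # 600.0
```

Under these assumed numbers, pay-per-use comes to roughly $24 against roughly $553 for ten 64-core nodes kept up for a day, and fanning every sample out at once finishes in 600 seconds instead of 4,200.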

Continue to serverless biology. (Coming soon…)

Analysis as Code

We will then explore new ways to organize and write our workflow code using a framework dubbed Analysis as Code (AaC), so that our logic is versioned, portable to any computer on our distributed medium, and exposed to biologists in the lab through a user interface.

As software systems have grown more complex over the past decade, sprawling cloud-native infrastructure has been tamed by a practice called Infrastructure as Code (IaC). Computing clusters and databases can now be defined and managed as a set of files. This process is human-interpretable, easy to version, and abstracts away the deployment of tedious services.

The proliferation of complex and highly-specific bioinformatics workflows within labs and companies demands a similar practice. The user interfaces that biologists interact with should be automatically generated from code files. These same files should also compile into portable and containerized workflow logic. The entire end-to-end analysis is now defined as a set of files in version control.
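As a rough illustration of generating an interface from code, the Python sketch below reads a workflow function's signature with the standard-library `inspect` module and emits a form description that a front end could render. The widget names, the `form_spec` helper, and the `align_reads` workflow are hypothetical; a real AaC framework would also need to package the function body into a container, which is not shown.

```python
import inspect
import json
from typing import Any, Callable, Dict, List

# Hypothetical mapping from parameter annotations to UI widget types.
WIDGETS: Dict[Any, str] = {str: "text", int: "number", float: "number", bool: "checkbox"}


def form_spec(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Derive a UI form description from a workflow function's signature."""
    fields: List[Dict[str, Any]] = []
    for name, param in inspect.signature(fn).parameters.items():
        spec: Dict[str, Any] = {
            "name": name,
            # Unannotated parameters fall back to a plain text box.
            "widget": WIDGETS.get(param.annotation, "text"),
            "required": param.default is inspect.Parameter.empty,
        }
        if not spec["required"]:
            spec["default"] = param.default
        fields.append(spec)
    return {"title": fn.__name__, "description": inspect.getdoc(fn) or "", "fields": fields}


def align_reads(fastq_path: str, reference: str = "GRCh38", threads: int = 8,
                keep_unmapped: bool = False) -> str:
    """Align sequencing reads to a reference genome (hypothetical workflow)."""
    # In an AaC setup this body would run inside a container; elided here.
    raise NotImplementedError


if __name__ == "__main__":
    print(json.dumps(form_spec(align_reads), indent=2))
```

Adding a parameter to the function adds a field to the form, so the interface and the logic live in the same file and are versioned together.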

Continue to analysis as code. (Coming soon…)

For scientists focused on answering more questions or steely-eyed entrepreneurs building biotechs from scratch, a next-generation biocomputing platform will accelerate the tempo of research and engineering. It is a place to store, analyze, and visualize the results of assays for clarity and insight so that time and energy can be focused on taming biology.

And so down the biocomputing stack we go.